Buddiotte

Android Robot for Real-Time Gesture-Based Gameplay

Client Overview

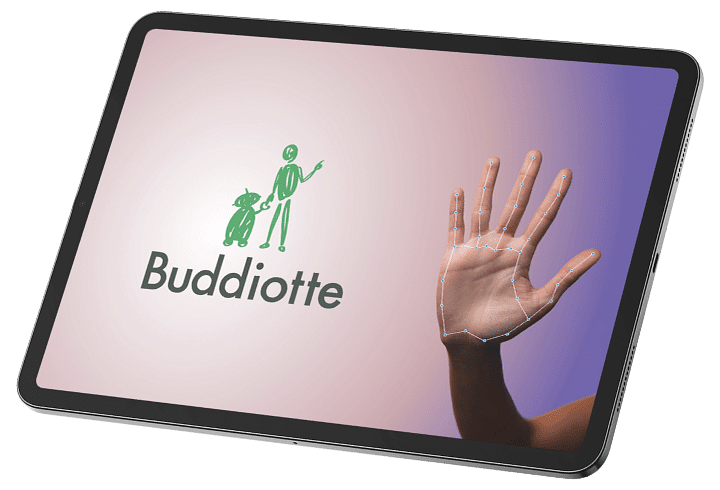

Buddiotte operates through a dual-screen setup in which one screen detects the player's hand gesture using a Convolutional Neural Network (CNN) and classifies it as rock, paper, or scissors. At the same time, the robot generates its own gesture using predefined algorithms. The system then compares the two gestures and determines the winner in real time.
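The comparison step reduces to a small lookup of which gesture beats which. The sketch below is illustrative rather than the project's code: the `Gesture` enum, `decide_winner`, and the random `robot_move` (standing in for the unspecified "predefined algorithms") are all hypothetical names.

```python
import random
from enum import Enum


class Gesture(Enum):
    ROCK = "rock"
    PAPER = "paper"
    SCISSORS = "scissors"


# Each gesture maps to the gesture it defeats.
BEATS = {
    Gesture.ROCK: Gesture.SCISSORS,
    Gesture.PAPER: Gesture.ROCK,
    Gesture.SCISSORS: Gesture.PAPER,
}


def robot_move(rng: random.Random) -> Gesture:
    """Hypothetical stand-in for the robot's predefined move algorithm."""
    return rng.choice(list(Gesture))


def decide_winner(player: Gesture, robot: Gesture) -> str:
    """Return 'player', 'robot', or 'draw' for a single round."""
    if player == robot:
        return "draw"
    return "player" if BEATS[player] == robot else "robot"


if __name__ == "__main__":
    rng = random.Random(42)
    robot = robot_move(rng)
    print(f"player=rock robot={robot.value} -> {decide_winner(Gesture.ROCK, robot)}")
```

Keeping the rules in a single `BEATS` table means the winner logic never needs a chain of nine `if` cases.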

This project highlights the power of machine learning for gesture recognition and demonstrates how AI can create engaging human-robot interactions. By combining real-time detection with robotic decision-making, Buddiotte shows AI and robotics working together on a technically challenging task.

Industry: Robotics

1. Communication between multiple devices over low-bandwidth internet

Stable, low-latency communication between devices was essential. Low internet bandwidth, however, could disrupt the data flow and break the synchronization between gesture detection and the robot's response.
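One common way to cope with low bandwidth is to keep each message tiny and tag it with a round number so the receiver can discard stale updates and stay in sync. The sketch below assumes a hypothetical 7-byte binary layout (round number, gesture code, millisecond timestamp); the source does not describe Buddiotte's actual message format, and `GestureMessage` and `RoundSynchronizer` are illustrative names.

```python
import struct
from dataclasses import dataclass

# Hypothetical wire layout: network byte order, no padding.
#   H = round number (uint16), B = gesture code (uint8),
#   I = milliseconds since session start (uint32)
MESSAGE_FORMAT = "!HBI"
MESSAGE_SIZE = struct.calcsize(MESSAGE_FORMAT)  # 7 bytes

GESTURE_CODES = {"rock": 0, "paper": 1, "scissors": 2}
CODE_TO_GESTURE = {code: name for name, code in GESTURE_CODES.items()}


@dataclass(frozen=True)
class GestureMessage:
    round_no: int
    gesture: str
    timestamp_ms: int


def encode(msg: GestureMessage) -> bytes:
    """Pack a message into the fixed-size binary payload."""
    return struct.pack(MESSAGE_FORMAT, msg.round_no, GESTURE_CODES[msg.gesture], msg.timestamp_ms)


def decode(payload: bytes) -> GestureMessage:
    """Unpack a binary payload back into a message."""
    round_no, code, ts = struct.unpack(MESSAGE_FORMAT, payload)
    return GestureMessage(round_no, CODE_TO_GESTURE[code], ts)


class RoundSynchronizer:
    """Accepts messages for the current or a newer round and rejects stale ones."""

    def __init__(self) -> None:
        self.latest_round = -1

    def accept(self, msg: GestureMessage) -> bool:
        if msg.round_no < self.latest_round:
            return False  # delayed message from an earlier round
        self.latest_round = msg.round_no
        return True
```

A fixed 7-byte payload is far smaller than an equivalent JSON object, and rejecting out-of-date rounds keeps a delayed packet from being scored against the wrong gesture.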

2. Detecting hand gestures correctly

Any misinterpretation would produce an incorrect game outcome. The system had to recognize multiple hand gestures under varying lighting conditions and hand positions, which was a significant technical hurdle.

3. Programming language barrier

We had to manually translate the Python code into C++ and Kotlin. Without direct access to AI libraries, we created custom algorithms to handle gesture detection and decision-making within the Android environment.

Real-Time Gesture Recognition

CNN-based AI models provided accurate hand gesture detection and recognition, keeping gameplay fast and reliable.

Adaptive Learning

Machine learning techniques allowed the system to learn from user interactions, improving its gesture recognition and decision-making over time.
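As a hedged illustration of how the robot's decision-making could adapt to a player over time, the sketch below counts the player's past gestures and plays the move that beats their most frequent one. This frequency-counting strategy is a swapped-in example; the source does not say which learning technique Buddiotte used, and `FrequencyOpponent` is a hypothetical name.

```python
import random
from collections import Counter
from typing import Optional

# Maps each gesture to the gesture that beats it.
COUNTER_MOVE = {"rock": "paper", "paper": "scissors", "scissors": "rock"}


class FrequencyOpponent:
    """Hypothetical adaptive robot strategy driven by the player's gesture history."""

    def __init__(self, seed: Optional[int] = None) -> None:
        self.history = Counter()
        self.rng = random.Random(seed)

    def observe(self, player_gesture: str) -> None:
        """Record the gesture the player just showed."""
        self.history[player_gesture] += 1

    def next_move(self) -> str:
        """Counter the player's most frequent gesture, or play randomly with no data."""
        if not self.history:
            return self.rng.choice(list(COUNTER_MOVE))
        most_common, _ = self.history.most_common(1)[0]
        return COUNTER_MOVE[most_common]
```

A purely frequency-based opponent is easy for an attentive player to exploit, so a real system would likely mix in randomness or weight recent rounds more heavily.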

Low Latency Communication

The MQTT protocol provided efficient data transfer with minimal delay, which is crucial for real-time interaction between devices.

Cross-Platform Compatibility

The app was designed to be compatible with a wide range of Android devices, ensuring a consistent experience across different hardware specifications.

1. Multi-round game session support
2. Visual countdown timer before gesture capture
3. Scoreboard display for user vs. robot
4. Sound and haptic feedback for game actions
5. Gesture capture history and accuracy stats
6. Light/Dark mode UI toggle
7. Gesture demonstration/tutorial mode
8. Auto-reset and replay functionality
9. Offline mode gameplay capability
10. Battery and connectivity status indicator
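Several of the listed features (multi-round sessions, the scoreboard, gesture history, and auto-reset) can share one small session object. The sketch below is hypothetical: the `GameSession` class, its fields, and the first-to-N-wins rule are illustrative assumptions, not the app's actual design.

```python
from dataclasses import dataclass, field
from typing import Optional

BEATS = {"rock": "scissors", "paper": "rock", "scissors": "paper"}


@dataclass
class GameSession:
    """Hypothetical multi-round session with a scoreboard and round history."""

    rounds_to_win: int = 2  # first to two wins, i.e. best of three decisive rounds
    player_score: int = 0
    robot_score: int = 0
    history: list = field(default_factory=list)  # (player, robot, outcome) tuples

    def play_round(self, player: str, robot: str) -> str:
        """Score one round, record it in the history, and return the outcome."""
        if player == robot:
            outcome = "draw"
        elif BEATS[player] == robot:
            outcome = "player"
            self.player_score += 1
        else:
            outcome = "robot"
            self.robot_score += 1
        self.history.append((player, robot, outcome))
        return outcome

    def winner(self) -> Optional[str]:
        """Return 'player' or 'robot' once someone reaches rounds_to_win, else None."""
        if self.player_score >= self.rounds_to_win:
            return "player"
        if self.robot_score >= self.rounds_to_win:
            return "robot"
        return None

    def reset(self) -> None:
        """Auto-reset hook: clear scores and history before a replay."""
        self.player_score = 0
        self.robot_score = 0
        self.history.clear()
```

The stored history is also the raw data a "gesture capture history and accuracy stats" screen would read from.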

Gesture recognition

A Convolutional Neural Network (CNN) model was used for high-precision gesture recognition, since CNNs are very effective at image classification. We trained the model on a diverse dataset of hand gestures so it could recognize different hand shapes in real time, regardless of variations in hand orientation, lighting, or distance from the camera.
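Because a misclassified gesture produces a wrong result, a common safeguard is to accept the CNN's prediction only when its confidence is high enough. The sketch assumes the model outputs one raw score (logit) per class in the order rock, paper, scissors; the label order, the 0.8 threshold, and the `classify` helper are hypothetical.

```python
import math
from typing import Optional, Sequence

LABELS = ("rock", "paper", "scissors")  # assumed output order of the CNN


def softmax(logits: Sequence[float]) -> list:
    """Numerically stable softmax: subtract the max logit before exponentiating."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(logits: Sequence[float], threshold: float = 0.8) -> Optional[str]:
    """Return the predicted label, or None if the model is not confident enough."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return LABELS[best] if probs[best] >= threshold else None
```

Returning `None` instead of a low-confidence guess lets the game ask the player to hold the gesture longer rather than score an uncertain frame.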

Live hand tracking

We implemented a live hand-tracking system that combined AI and computer vision techniques to monitor hand movements in real time. It captured the user's gestures as they were made, allowing the robot to detect and process each one immediately and keeping the experience responsive and interactive.
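Per-frame predictions from a live camera feed can flicker between classes. One way to steady them, sketched here as an assumption rather than the project's documented method, is a sliding-window majority vote that commits to a gesture only once it dominates the last few frames. `GestureSmoother`, the window of 5 frames, and the minimum of 4 agreeing frames are illustrative.

```python
from collections import Counter, deque
from typing import Optional


class GestureSmoother:
    """Sliding-window majority vote over per-frame gesture predictions."""

    def __init__(self, window: int = 5, min_agree: int = 4) -> None:
        self.frames = deque(maxlen=window)  # oldest frame drops out automatically
        self.min_agree = min_agree

    def update(self, prediction: Optional[str]) -> Optional[str]:
        """Add one frame's prediction (None = no confident gesture) and return the
        stable gesture, or None if no gesture dominates the window yet."""
        self.frames.append(prediction)
        counts = Counter(p for p in self.frames if p is not None)
        if not counts:
            return None
        label, n = counts.most_common(1)[0]
        return label if n >= self.min_agree else None
```

A larger window gives steadier output at the cost of a few extra frames of latency, so the window size trades responsiveness against robustness.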

MQTT protocol

MQTT is well suited to low-bandwidth environments because it enables efficient, real-time data transmission between devices. Its low overhead and publish/subscribe messaging model streamlined communication between the robot and the user's device.
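In MQTT's publish/subscribe model, devices never address each other directly: a publisher sends a message to a topic, and the broker delivers it to every client whose subscription filter matches. The sketch below implements the MQTT topic-filter wildcard rules (`+` matches exactly one level, `#` matches all remaining levels including the parent, and wildcards at the first level do not match `$`-prefixed topics) together with a toy in-memory broker. `TinyBroker` and topics such as `buddiotte/robot/gesture` are hypothetical; a real app would rely on an MQTT client library and broker for this.

```python
from typing import Callable, List, Tuple

Callback = Callable[[str, bytes], None]


def topic_matches(topic_filter: str, topic: str) -> bool:
    """Return True if an MQTT topic name matches a subscription filter."""
    if topic.startswith("$") and topic_filter[:1] in ("+", "#"):
        return False  # first-level wildcards never match $-prefixed topics
    f_levels = topic_filter.split("/")
    t_levels = topic.split("/")
    for i, f in enumerate(f_levels):
        if f == "#":
            return i == len(f_levels) - 1  # '#' is only valid as the last level
        if i >= len(t_levels):
            return False  # filter is longer than the topic
        if f != "+" and f != t_levels[i]:
            return False  # literal level mismatch
    return len(f_levels) == len(t_levels)


class TinyBroker:
    """In-memory stand-in for an MQTT broker, for illustration only."""

    def __init__(self) -> None:
        self.subscriptions: List[Tuple[str, Callback]] = []

    def subscribe(self, topic_filter: str, callback: Callback) -> None:
        self.subscriptions.append((topic_filter, callback))

    def publish(self, topic: str, payload: bytes) -> int:
        """Deliver the payload to every matching subscriber; return the delivery count."""
        delivered = 0
        for topic_filter, callback in self.subscriptions:
            if topic_matches(topic_filter, topic):
                callback(topic, payload)
                delivered += 1
        return delivered
```

Because publishers and subscribers only share topic names, the gesture screen and the robot can be added, replaced, or restarted without either needing the other's network address.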

Interactive User Interface

To make the game user-friendly, we designed a simple and intuitive user interface (UI). The UI featured a game menu with Play, Pause, and Stop buttons, along with a result display screen that showed the winner after each round. This streamlined UI ensured users could easily control and track the game, enhancing overall engagement and usability.
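The Play, Pause, and Stop controls map naturally onto a small state machine. The states, the allowed transitions, and the `GameController` class below are assumptions for illustration; the source only names the three buttons.

```python
from enum import Enum, auto


class GameState(Enum):
    IDLE = auto()
    PLAYING = auto()
    PAUSED = auto()


# Allowed (state, button) -> next-state transitions; any other press is ignored.
TRANSITIONS = {
    (GameState.IDLE, "play"): GameState.PLAYING,
    (GameState.PLAYING, "pause"): GameState.PAUSED,
    (GameState.PAUSED, "play"): GameState.PLAYING,
    (GameState.PLAYING, "stop"): GameState.IDLE,
    (GameState.PAUSED, "stop"): GameState.IDLE,
}


class GameController:
    """Hypothetical controller tracking which state the game UI is in."""

    def __init__(self) -> None:
        self.state = GameState.IDLE

    def press(self, button: str) -> GameState:
        """Apply a button press; invalid presses leave the state unchanged."""
        self.state = TRANSITIONS.get((self.state, button), self.state)
        return self.state
```

Ignoring presses that have no transition (for example, Pause while idle) keeps double taps and stray inputs from putting the UI into an inconsistent state.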

We successfully demonstrated the integration of AI, robotics, and real-time hand gesture recognition in a fun and interactive gaming experience. The project achieved its goal of creating a fully functional Rock-Paper-Scissors game where the robot intelligently responded to user gestures. By overcoming technical hurdles, we delivered a seamless and responsive application. This innovative solution highlights the potential of AI-driven robots in entertainment and user interaction.

Merging Robotics & Vision for Real-Time Gameplay